Sen2Agri system released

After 3 years of development, we are very happy to share the news of the Sen2Agri system release. The Sen2Agri system is […]

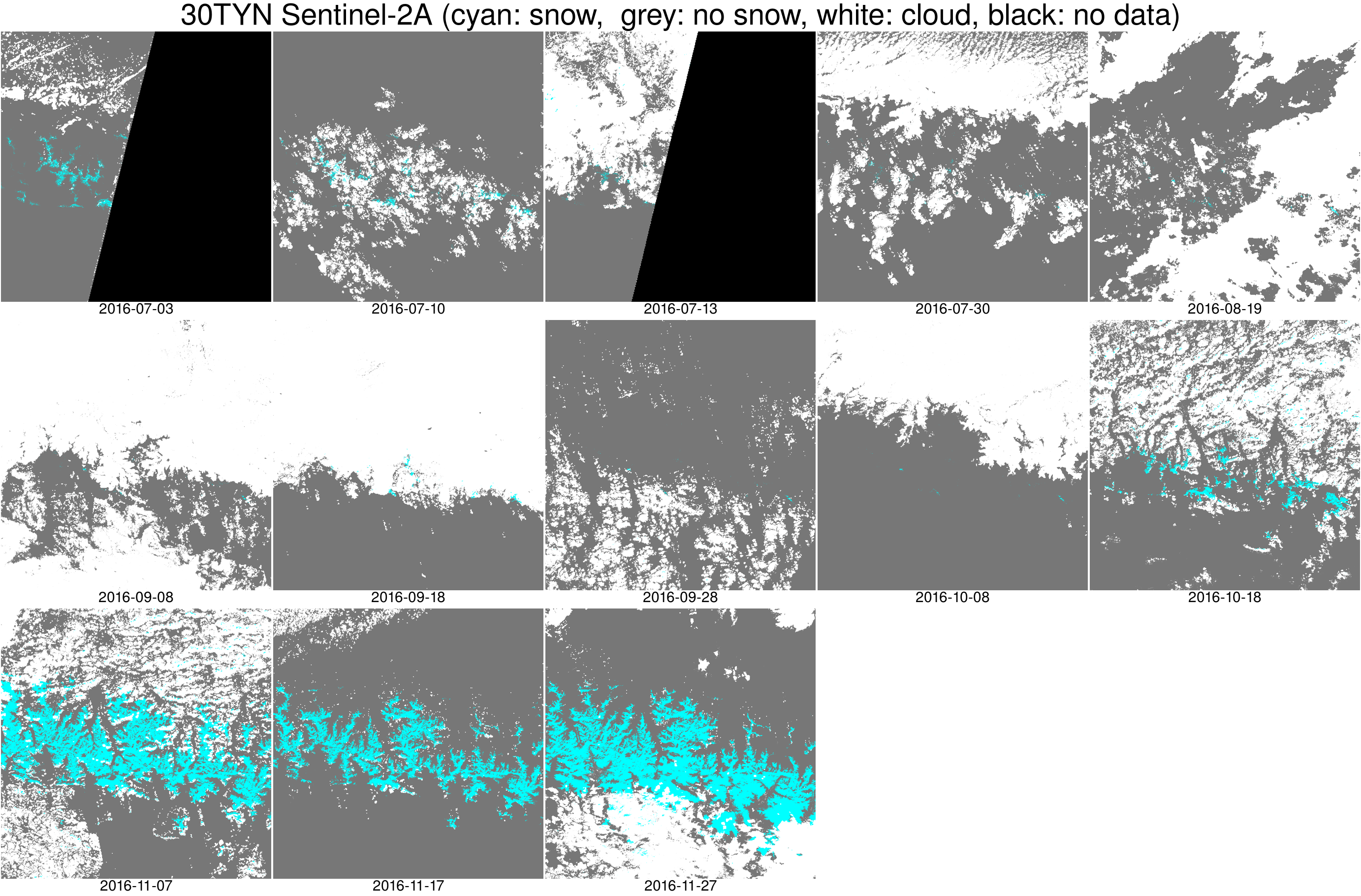

We are pleased to announce that Theia has put online 93 Sentinel-2 snow products, corresponding to 6 tiles in the […]

THEIA passed two new milestones at the beginning of this summer: the number of Sentinel-2A Level2A products just passed the […]

In the framework of the Pyrenees Climate Change Observatory (OPCC) the Cesbio is contributing to the analysis of the snow […]

After reporting rather bad news about MUSCATE for quite a long time, I am very happy to send good […]

The animation below shows the evolution of Thwaites glacier eastern ice shelf in West Antarctica, from July 2016 to May […]

Theia’s Sentinel-2 L2A counter passed the 15 000 threshold on the 26th of May. The attached image shows the zones […]

The Sentinel-2B satellite was launched in March 2017 and joined its twin, Sentinel-2A, which had been in orbit since June 2015. […]

Here are the sites that will be observed by the long-awaited French-Israeli satellite Venµs. Venµs will be launched this […]

In the post "Landslides in Kyrgyzstan captured by Sentinel-2", I showed two landslides that I identified as the Kurbu and […]