Sen2Agri final version was just published

The Sen2Agri system has just been released as version 2.0. As the project has now ended and will not receive more […]

Recently I generated one year of snow maps from Sentinel-2 in the Canadian Rockies for a talented colleague who is […]

In October 2018, Marie Ballère started a Ph.D. funded by WWF and CNES. The aim of her Ph.D. is to […]

The combined use of VENμS, Sentinel-2 and Landsat-8 data can increase the likelihood of obtaining cloud-free images or may […]
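
As a rough illustration of why adding sensors helps, here is a minimal sketch that computes the probability of getting at least one cloud-free acquisition. It assumes each overpass is cloud-free independently with the same probability, which is optimistic because cloud cover is correlated in time. The 30% clear-sky probability and the overpass counts are hypothetical numbers, not results from the post.

```python
from math import prod


def prob_at_least_one_clear(clear_probs):
    """Probability that at least one acquisition is cloud-free.

    Assumes acquisitions are independent (an optimistic simplification,
    since clouds persist over several days).
    """
    # P(all cloudy) is the product of (1 - p_i); its complement is P(at least one clear).
    return 1.0 - prod(1.0 - p for p in clear_probs)


# Hypothetical scenario: 30% chance that any single overpass is clear.
p_clear = 0.3
single_mission = [p_clear] * 2  # hypothetical: 2 Sentinel-2 overpasses in the window
multi_mission = [p_clear] * 5   # hypothetical: plus 3 extra VENμS / Landsat-8 overpasses

print(f"Sentinel-2 only: {prob_at_least_one_clear(single_mission):.3f}")
print(f"Combined:        {prob_at_least_one_clear(multi_mission):.3f}")
```

Under these assumptions the chance of a clear view rises from 1 - 0.7² = 0.51 to 1 - 0.7⁵ ≈ 0.83; real gains will be smaller whenever cloudy days cluster together.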

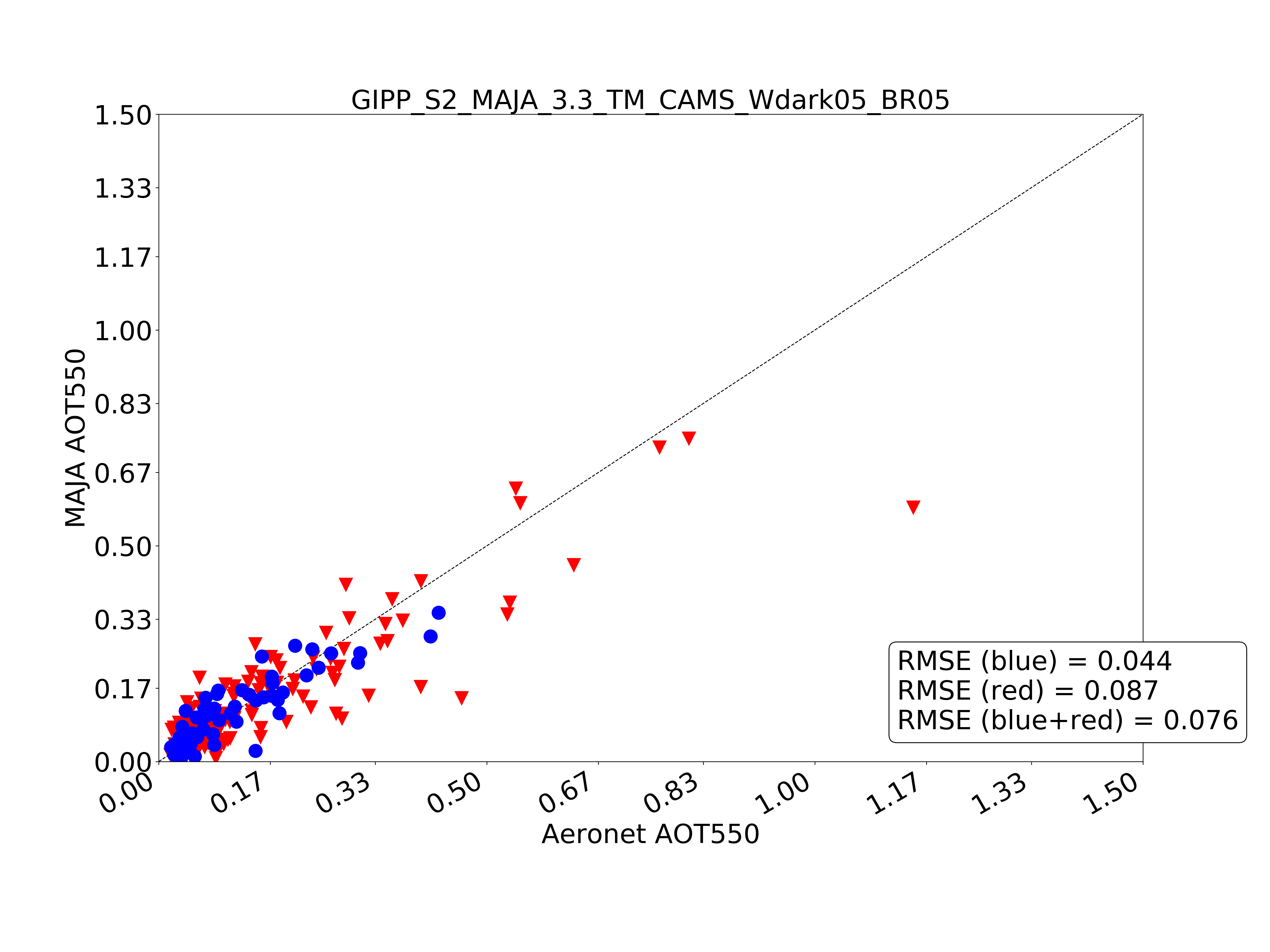

What's new? Phew! It has been quite a while, but MAJA 3.3 is now available, and it improves a LOT […]

From Mont Valier to la Roca Foradada, on April 30 (left) and May 6, 2019 (right). 3D view: download KMZ […]

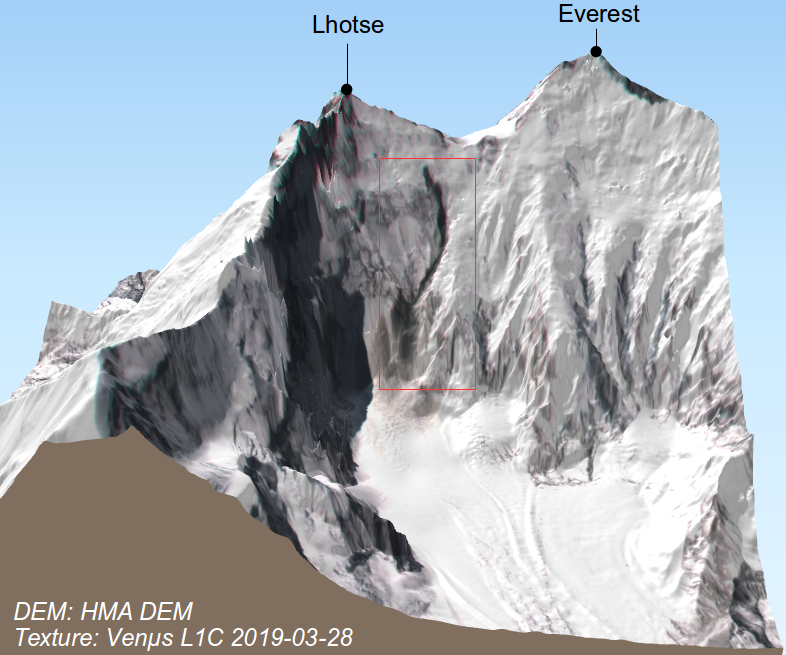

I noticed a curious dark trace on the steep slope below the South Col, between Mount Everest and Lhotse, in this […]